WIP.CX

Hacking the Docker Protocol to Build Better Cloud Hosting

Docker is software that lets you describe and run complete infrastructure with a few lines of YAML config, on any machine. You can run, say, MongoDB, Redis, and your own program side by side, each with its own isolated filesystem, in what's called a container.

It really feels like magic during development, but it gets very frustrating when deploying to production.

While Docker is used by more than 9 million developers, no major cloud provider offers native support for Docker deployment.

This forces you into two options:

- Switch to Kubernetes: great for large, complex, multi-service platforms, but absolute overkill for small to medium teams. It turns simple deployments into a mountain of configuration, complexity, and operational overhead. While this works, it's also much slower.

- Maintain your own servers: rent a VPS or an EC2 instance, install Docker, then patch, secure, and babysit everything yourself. This works... but at a cost. EC2 instances and their equivalents aren't cheap, and neither is the engineering time needed to keep them running. Solutions like coolify.io simplify deployment, but they don't solve the maintenance issue: you still need to update the OS and handle potential downtime yourself.

Other modern providers like fly.io and railway.com try to simplify this, but they come with their own constraints:

- No Docker Compose compatibility. You're forced to push one container at a time.

- New CLIs or UIs to learn. Steep learning curve.

- Opinionated workflows that force you to adapt your project to their system.

- Heavily biased toward their own managed services, which might not fit your needs.

Today, we're fixing this.

So that anyone can deploy any Compose file, such as:

```yaml
services:
  gitlab:
    image: gitlab/gitlab-ce:latest
    hostname: "front.gitlab.wip.cx"
    ports:
      - "80:80" # change to "8080:80" if 80 is busy
    labels:
      com.wip.http: front:80
    volumes:
      - ./config:/etc/gitlab
      - ./logs:/var/log/gitlab
      - ./data:/var/opt/gitlab
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'https://front.gitlab.wip.cx'
        nginx['listen_https'] = false
        nginx['redirect_http_to_https'] = false
        nginx['listen_port'] = 80
        nginx['listen_addresses'] = ['0.0.0.0']
```

To production, in a remote cloud environment, with a simple:

```
$ docker compose up -d
```

And boom, it's online.

- No Kubernetes overhead.

- No provider lock-in.

- No reinventing your workflow.

- No maintenance.

For a more practical example, check out this video of me deploying GitLab:

Like this idea?

Want to use it?

Register for the beta ― $20

You'll receive an email within 24 hours with early access to the wip.cx beta.

This access lets you deploy any project you want during the beta period.

You can also email me directly to get help, suggest features, or send feedback.

Let's build it

Docker follows a client–server architecture. The client runs locally and connects to a Docker daemon, and the two communicate through a REST API (see the Docker Engine API documentation). Most of the time, that daemon isn't remote at all: it runs on the same machine.

So you could technically connect to a remote daemon to push to production. This setup isn't common in practice, though. Exposing the Docker daemon API directly carries major security risks, since anyone who can reach it effectively has root on the host. Making it even barely secure also takes a lot of extra configuration.

But we can use this to our advantage. We can build a reverse proxy between the client and the daemon. It lets us intercept, inspect, modify, and secure requests, and authenticate each user against their project.

For those who don't know, a reverse proxy is a server that forwards each client request to a target server, then returns the target server's response to the client as its own.

In our case, each request will go through this process:

```
Client (HTTPS) ⇌ Reverse Proxy (HTTP) ⇌ Docker Daemon (HTTP)
```

This way, we can modify the request before sending it to the remote server, and modify the response before sending it back to the client.

Docker is written in Go, so I'll write the proxy in Go too. This comes in handy for some features of the Docker API, such as the /grpc endpoint.

Here is my code for a basic reverse proxy in front of a remote Docker daemon. This listing serves plain HTTP; TLS isn't shown here.

```go
package main

import (
	"bytes"
	"io"
	"log"
	"net/http"
	"os"

	"github.com/fatih/color" // assumed source of color.Red
	"github.com/gorilla/handlers"
	"github.com/gorilla/mux"
	"golang.org/x/net/http2"
	"golang.org/x/net/http2/h2c"
)

// baseURL is the remote Docker daemon every request is forwarded to.
const baseURL = "http://192.168.100.136:2375"

// performRequest mirrors the client's request to the Docker daemon and copies
// the response back. stream is true for long-lived endpoints such as /events,
// whose output must be relayed as it arrives rather than buffered.
func performRequest(w http.ResponseWriter, r *http.Request, stream bool) {
	user := GetUser(r)

	fullURL := baseURL + r.URL.Path
	if r.URL.RawQuery != "" {
		fullURL += "?" + r.URL.RawQuery
	}
	log.Printf("[%s] %s %s", user, r.Method, fullURL)

	// Buffer POST/PUT bodies so they can be inspected or rewritten later.
	var body io.Reader
	if (r.Method == http.MethodPost || r.Method == http.MethodPut) && !stream {
		data, err := io.ReadAll(r.Body)
		if err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
			return
		}
		body = bytes.NewReader(data)
	}

	req, err := http.NewRequest(r.Method, fullURL, body)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	if ct := r.Header.Get("Content-Type"); ct != "" {
		req.Header.Set("Content-Type", ct)
	}

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadGateway) // daemon unreachable
		return
	}
	defer resp.Body.Close()

	FilterAndCopyHeaders(w.Header(), resp.Header)
	w.WriteHeader(resp.StatusCode)

	if !stream {
		data, err := io.ReadAll(resp.Body)
		if err != nil {
			color.Red("Error reading response body: %v", err)
		}
		w.Write(data)
		return
	}

	// Relay the stream chunk by chunk, flushing each one so the client sees
	// events immediately instead of when the server's write buffer fills up.
	flusher, _ := w.(http.Flusher)
	buf := make([]byte, 32*1024)
	for {
		n, err := resp.Body.Read(buf)
		if n > 0 {
			if _, werr := w.Write(buf[:n]); werr != nil {
				color.Red("Error streaming response: %v", werr)
				return
			}
			if flusher != nil {
				flusher.Flush()
			}
		}
		if err != nil {
			if err != io.EOF {
				color.Red("Error streaming response: %v", err)
			}
			return
		}
	}
}

func main() {
	r := mux.NewRouter()

	// Resource-specific routes, all built on performRequest (defined elsewhere).
	RegisterContainersRoutes(r)
	RegisterNetworksRoutes(r)
	RegisterImagesRoutes(r)
	RegisterVolumesRoutes(r)

	// forward wraps performRequest as a route handler.
	forward := func(stream bool) http.HandlerFunc {
		return func(w http.ResponseWriter, req *http.Request) {
			performRequest(w, req, stream)
		}
	}
	r.HandleFunc("/{version}/info", forward(false)).Methods("GET")
	r.HandleFunc("/version", forward(false)).Methods("GET")
	r.HandleFunc("/{version}/version", forward(false)).Methods("GET")
	r.HandleFunc("/_ping", forward(false)).Methods("GET", "HEAD")
	r.HandleFunc("/{version}/events", forward(true)).Methods("GET")
	r.HandleFunc("/df", forward(false)).Methods("GET")

	r.PathPrefix("/grpc").HandlerFunc(func(w http.ResponseWriter, req *http.Request) {
		// ...
	})

	logged := handlers.LoggingHandler(os.Stdout, r)
	log.Println("Starting server on :5000")
	// h2c serves cleartext HTTP/2 alongside HTTP/1.1 on the same port.
	log.Fatal(http.ListenAndServe(":5000", h2c.NewHandler(logged, &http2.Server{})))
}
```

The performRequest function mirrors the user's request to the Docker daemon. Instead of blindly forwarding every request, we explicitly declare each allowed route in Go. Anything not declared is rejected by the router instead of reaching the daemon, so we avoid unexpected behavior.

Functions like RegisterContainersRoutes, RegisterNetworksRoutes, and the others follow the same pattern, setting up routes that rely on performRequest.
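gorilla/mux enforces the allowlist route by route. If you would rather keep a single generic forwarder, the same default-deny idea fits in one check. This is a sketch under assumptions: `allowedRoutes` and `isAllowed` are hypothetical names, and only a few of the routes from `main()` are listed.

```go
package main

import (
	"fmt"
	"regexp"
)

// route is one allowed (method, path pattern) pair.
type route struct {
	method  string
	pattern *regexp.Regexp
}

// versioned matches an optional API version prefix such as /v1.43.
const versioned = `^(/v[0-9]+\.[0-9]+)?`

// allowedRoutes is a hypothetical allowlist; everything else is denied.
var allowedRoutes = []route{
	{"GET", regexp.MustCompile(versioned + `/_ping$`)},
	{"HEAD", regexp.MustCompile(versioned + `/_ping$`)},
	{"GET", regexp.MustCompile(versioned + `/version$`)},
	{"GET", regexp.MustCompile(versioned + `/info$`)},
	{"GET", regexp.MustCompile(versioned + `/events$`)},
	{"GET", regexp.MustCompile(versioned + `/containers/json$`)},
	{"POST", regexp.MustCompile(versioned + `/images/create$`)},
}

// isAllowed reports whether method+path matches an allowlisted route.
func isAllowed(method, path string) bool {
	for _, r := range allowedRoutes {
		if r.method == method && r.pattern.MatchString(path) {
			return true
		}
	}
	return false
}

func main() {
	for _, c := range [][2]string{
		{"GET", "/v1.43/containers/json"},
		{"POST", "/v1.43/containers/create"},
		{"HEAD", "/_ping"},
	} {
		fmt.Printf("%s %s allowed=%v\n", c[0], c[1], isAllowed(c[0], c[1]))
	}
}
```

The anchored patterns (`^...$`) matter. Without them, a path that merely contains an allowed route as a substring would slip through.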

Once this is running, you can point your Docker client to the proxy:

```
$ export DOCKER_HOST=tcp://<ip>:5000
$ docker compose up -d
```

From the client's perspective, it feels identical to connecting directly to the Docker daemon.

Adding authentication

Now that we have a working reverse proxy, we need to start identifying users so they can't interfere with one another.

The Docker client doesn't offer a simple per-project way to attach a custom header or value to its requests, which makes things tricky. The closest option, HttpHeaders in ~/.docker/config.json, is a global client setting.

To get around this, I’m using a little hack I originally borrowed from malware developers: leveraging DNS resolution as a transport layer for data.

Here's how it works. Configure a wildcard A record for *.wip.cx that points to your server, and any hostname like hello-world.wip.cx resolves to you. When an HTTP request is made, the Host header contains the original domain name, not the resolved IP. So if a client connects to hello-world-123.wip.cx, the server actually receives:

```http
GET / HTTP/1.1
Host: hello-world-123.wip.cx
```

That means we can encode an API key directly into the hostname. For example:

```
H7eryXJVrbau8UFrYVcDtO8a47foFc.wip.cx
```

On the server side, we just parse the Host value to extract the key:

```go
// GetUser returns the API key encoded as the first label of the Host header,
// e.g. "H7eryXJVrbau8UFrYVcDtO8a47foFc" for "H7eryXJVrbau8UFrYVcDtO8a47foFc.wip.cx:5000".
// It returns "" when the Host header is empty.
func GetUser(r *http.Request) string {
	if r.Host == "" {
		color.Red("Bad Host")
		return ""
	}
	// strings.Split always returns at least one element, so parts[0] is safe;
	// a ":port" suffix ends up in a later part and is ignored.
	parts := strings.Split(r.Host, ".")
	return parts[0]
}
```

This approach works surprisingly well, though it's far from secure. DNS lookups aren't encrypted, so the token is exposed to every resolver and intermediary along the way. Still, as a lightweight workaround, it does the job.
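`GetUser` trusts whatever the client sends, including hosts outside wip.cx. Here is a slightly stricter variant as a sketch. `userFromHost` is a hypothetical name, and the rules it enforces are my own assumptions: the port is stripped, the domain must match case-insensitively, and the key must be exactly one label.

```go
package main

import (
	"fmt"
	"net"
	"strings"
)

// userFromHost extracts the API key from a Host header such as
// "KEY.wip.cx" or "KEY.wip.cx:5000". Hosts that are not exactly one
// label below baseDomain are rejected.
func userFromHost(host, baseDomain string) (string, bool) {
	// Drop an optional ":port" suffix.
	if h, _, err := net.SplitHostPort(host); err == nil {
		host = h
	}
	host = strings.TrimSuffix(host, ".") // fully qualified form "KEY.wip.cx."
	suffix := "." + baseDomain
	// Domain names are case-insensitive, so compare the suffix with EqualFold,
	// but return the key exactly as the client sent it.
	if len(host) <= len(suffix) || !strings.EqualFold(host[len(host)-len(suffix):], suffix) {
		return "", false
	}
	key := host[:len(host)-len(suffix)]
	if strings.Contains(key, ".") {
		return "", false // nested subdomains are not valid keys
	}
	return key, true
}

func main() {
	for _, h := range []string{"H7eryXJVrbau8UFrYVcDtO8a47foFc.wip.cx:5000", "a.b.wip.cx", "example.com"} {
		key, ok := userFromHost(h, "wip.cx")
		fmt.Printf("%-45s key=%q ok=%v\n", h, key, ok)
	}
}
```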

A (naive) plan for isolation

Now that we have a working proxy with authentication, we need some way to isolate users from each other. The simplest (though naive) approach is to intercept requests and rename resources behind the scenes.

Whenever a user creates a container or pulls an image, we can replace the user’s chosen name with a UUID to avoid collisions. The mapping between the original name and the UUID is stored in Redis. Later, when a user lists containers or images, we resolve the UUIDs back to their friendly names, keeping the illusion intact.

This isn't bulletproof: there's plenty of code to write, and leaks are possible. But it's enough to get a PoC working.
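To make the renaming idea concrete, here is a sketch with an in-memory map standing in for Redis. It tracks (user, friendly name) to daemon-side UUID, plus the reverse lookup used when listing. `nameMap`, `Rename`, `Resolve`, and `newUUID` are hypothetical names. The UUID is a hand-rolled RFC 4122 version 4 value, used only to avoid a dependency.

```go
package main

import (
	"crypto/rand"
	"fmt"
	"sync"
)

// newUUID returns a random RFC 4122 version-4 UUID string.
func newUUID() (string, error) {
	var b [16]byte
	if _, err := rand.Read(b[:]); err != nil {
		return "", err
	}
	b[6] = (b[6] & 0x0f) | 0x40 // version 4
	b[8] = (b[8] & 0x3f) | 0x80 // RFC 4122 variant
	return fmt.Sprintf("%x-%x-%x-%x-%x", b[0:4], b[4:6], b[6:8], b[8:10], b[10:16]), nil
}

// nameMap maps each user's friendly names to daemon-side UUIDs and back.
type nameMap struct {
	mu      sync.Mutex
	forward map[string]string // "user:name" -> uuid
	reverse map[string]string // uuid -> name
	owner   map[string]string // uuid -> user
}

func newNameMap() *nameMap {
	return &nameMap{forward: map[string]string{}, reverse: map[string]string{}, owner: map[string]string{}}
}

// Rename returns the daemon-side UUID for a user's name, creating one on first use.
func (m *nameMap) Rename(user, name string) (string, error) {
	m.mu.Lock()
	defer m.mu.Unlock()
	k := user + ":" + name
	if id, ok := m.forward[k]; ok {
		return id, nil
	}
	id, err := newUUID()
	if err != nil {
		return "", err
	}
	m.forward[k], m.reverse[id], m.owner[id] = id, name, user
	return id, nil
}

// Resolve maps a UUID back to its friendly name, but only for its owner.
func (m *nameMap) Resolve(user, id string) (string, bool) {
	m.mu.Lock()
	defer m.mu.Unlock()
	name, ok := m.reverse[id]
	if !ok || m.owner[id] != user {
		return "", false
	}
	return name, true
}

func main() {
	m := newNameMap()
	id, err := m.Rename("alice", "web")
	if err != nil {
		panic(err)
	}
	fmt.Println("alice's web ->", id)
	name, ok := m.Resolve("alice", id)
	fmt.Println("resolve as alice:", name, ok)
	_, ok = m.Resolve("bob", id)
	fmt.Println("resolve as bob:", ok)
}
```

Checking ownership inside `Resolve` is what keeps one user's listing from revealing another user's resources, even if they guess a UUID.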

Here's how I handle image pulls:

```go
r.HandleFunc("/{version}/images/create", func(w http.ResponseWriter, r *http.Request) {
	fullURL := baseURL + r.URL.Path
	if r.URL.RawQuery != "" {
		fullURL += "?" + r.URL.RawQuery
	}
	log.Printf("%s %s", r.Method, fullURL)
	for name, values := range r.Header {
		if name == "X-Registry-Auth" {
			continue // carries registry credentials; never log it
		}
		log.Printf("%s: %v", name, values)
	}

	rdb, ctx, err := ConnectToRedis()
	if err != nil {
		color.Red("Error connecting to Redis: %v", err)
		http.Error(w, "Error connecting to Redis", http.StatusInternalServerError)
		return
	}
	defer rdb.Close()

	fromImage := r.URL.Query().Get("fromImage")
	tag := r.URL.Query().Get("tag")
	if tag == "" {
		tag = "latest"
	}
	// "docker.io/mcp/postgres" and "mcp/postgres" are the same image.
	fromImage = strings.TrimPrefix(fromImage, "docker.io/")

	org, name, _ := ImageSplitName(fromImage)
	log.Printf("Org: %s, Name: %s, Tag: %s", org, name, tag)

	// Record that this user owns this public image.
	user := GetUser(r)
	key := user + ":images:public:" + org + ":" + name + ":" + tag
	log.Printf("Key: %s", key)
	if err := rdb.Set(ctx, key, true, 0).Err(); err != nil {
		color.Red("Error storing image ownership in Redis: %v", err)
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}

	req, err := http.NewRequest(r.Method, fullURL, r.Body)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	// Preserve Content-Type if present.
	if ct := r.Header.Get("Content-Type"); ct != "" {
		req.Header.Set("Content-Type", ct)
	}

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadGateway)
		return
	}
	defer resp.Body.Close()

	FilterAndCopyHeaders(w.Header(), resp.Header)
	w.WriteHeader(resp.StatusCode)
	// Relay the pull progress messages back to the client.
	if _, err := io.Copy(w, resp.Body); err != nil {
		color.Red("Error streaming response: %v", err)
	}
}).Methods("POST")
```

This endpoint pulls an image from Docker Hub and streams progress messages back, which the client displays as the pull's progress.

fromImage contains the name of the image to pull, such as mcp/postgres. Here, org is "mcp" and name is "postgres". But fromImage can also be just postgres, with no organization. That's why ImageSplitName is more than a simple split on "/".

By using a UUID on the daemon instead of the Docker Hub name, we make sure that even when multiple users pull the same image, each copy is stored as a separate image on our side. This avoids name collisions and supply-chain attacks between users.

But once you start renaming resources, you have to keep the illusion going everywhere. For example, when listing images, we fetch the Docker daemon's response, rewrite the names using the mappings in Redis, and strip out any images that don't belong to the user:

```go
r.HandleFunc("/{version}/images/json", func(w http.ResponseWriter, r *http.Request) {
	fullURL := baseURL + r.URL.Path
	if r.URL.RawQuery != "" {
		fullURL += "?" + r.URL.RawQuery
	}
	log.Printf("%s %s", r.Method, fullURL)

	req, err := http.NewRequest(r.Method, fullURL, nil)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadGateway)
		return
	}
	defer resp.Body.Close()

	data, err := io.ReadAll(resp.Body)
	if err != nil {
		http.Error(w, "Error reading daemon response", http.StatusBadGateway)
		return
	}

	FilterAndCopyHeaders(w.Header(), resp.Header)
	// The body is rewritten below, so the daemon's Content-Length no longer applies.
	w.Header().Del("Content-Length")

	// Pass daemon errors through unchanged; only a 200 carries an image list.
	if resp.StatusCode != http.StatusOK {
		w.WriteHeader(resp.StatusCode)
		w.Write(data)
		return
	}

	rdb, ctx, err := ConnectToRedis()
	if err != nil {
		color.Red("Error connecting to Redis: %v", err)
		http.Error(w, "Error connecting to Redis", http.StatusInternalServerError)
		return
	}
	defer rdb.Close()

	user := GetUser(r)

	// The daemon returns a JSON array of image summaries.
	var images []map[string]interface{}
	if err := json.Unmarshal(data, &images); err != nil {
		color.Red("Error unmarshalling JSON: %v", err)
		http.Error(w, "Error processing response", http.StatusInternalServerError)
		return
	}

	result := make([]interface{}, 0)
	for _, image := range images {
		repoTags, ok := image["RepoTags"].([]interface{})
		if !ok {
			continue
		}
		for _, repo := range repoTags {
			repoName, ok := repo.(string)
			if !ok {
				continue
			}
			org, name, tag := ImageSplitName(repoName)

			// Case 1: a public image this user pulled (go-redis stores true as "1").
			key := user + ":images:public:" + org + ":" + name + ":" + tag
			if value, err := rdb.Get(ctx, key).Result(); err == nil && value == "1" {
				result = append(result, image)
				break
			}

			// Case 2: a renamed image; show the user's friendly name, not the UUID.
			key = user + ":images:dockername:" + name + ":name"
			if value, err := rdb.Get(ctx, key).Result(); err == nil && value != "" {
				n := ""
				if org != "" {
					n += org + "/"
				}
				n += value
				if tag != "" {
					n += ":" + tag
				}
				image["RepoTags"] = []string{n}
				result = append(result, image)
				break
			}
		}
	}

	encodedData, err := json.Marshal(result)
	if err != nil {
		color.Red("Error marshalling JSON: %v", err)
		http.Error(w, "Error processing response", http.StatusInternalServerError)
		return
	}
	// Headers are written only now, so the error paths above can still set a status.
	w.Header().Set("Content-Type", "application/json")
	w.WriteHeader(http.StatusOK)
	w.Write(encodedData)
}).Methods("GET")
```

Apply the same strategy to containers, networks, and volumes, and you get a surprisingly convincing multi-tenant setup:

```
$ DOCKER_HOST=tq3b2bn77198uBwKq2px.wip.cx docker-compose up -d
$ DOCKER_HOST=tq3b2bn77198uBwKq2px.wip.cx docker ps
CONTAINER ID   IMAGE                           COMMAND                  CREATED       STATUS                          PORTS     NAMES
e9c306b1738d   moby/buildkit:buildx-stable-1   "buildkitd --root /t…"   5 weeks ago   Restarting (1) 47 seconds ago             buildx_buildkit_tmpfs-builder0
...
$ DOCKER_HOST=c87vKZzKSW573c5jHesZ.wip.cx docker ps
```

Each user sees only their own environment, even though everything is running on the same Docker daemon.

Of course, if alarms aren’t going off in your head yet, let me be clear: while this trick works fine for a prototype, building true isolation in a cloud service is far more complex. This approach is a start, but a very naive one.

Security, security, security.

Okay, let's get back down to Earth. The biggest weakness of this system is also its greatest strength. Running everything on a single Docker daemon gives you the best possible performance per unit of hardware, but it also makes the system extremely insecure.

With a single Docker daemon, all containers share the same kernel and are isolated only by kernel features, mainly namespaces. Because of this, a user could exploit a kernel vulnerability from inside their container. That would give them kernel privileges over every other user's containers and let them break out to the host filesystem.

This is one of the fundamental challenges of running a cloud service, and it’s why major providers like AWS, Azure and Google have heavily invested in solving it.

The standard approach is to run a kernel per user using VMs, and to layer different isolation mechanisms. Most providers also add a sandboxing mechanism on top, depending on the service. AWS built Firecracker for their Lambda service and its need for short-lived workloads (firecracker-microvm.github.io). Platforms like Fly.io use it as well, as they describe in their excellent post on sandboxing and workload isolation.

That said, I have concerns about Firecracker's performance compared to QEMU (https://hocus.dev/blog/qemu-vs-firecracker/). Firecracker seems heavily optimized for short-lived workloads, which isn't my use case here. The linked article also mentions that it never gives RAM back to the host. QEMU, on the other hand, has been battle-tested for years and is highly optimized. It wouldn't be surprising if it outperformed Firecracker on more classic, long-running workloads.

Still, layering containers on top of VMs is far safer than sharing a single kernel across all users. But it's not perfect: KVM itself has had vulnerabilities, and host escapes remain possible. In the end, nothing replaces strong policies and true hardware-level isolation.

By combining both, though, an attack gets very hard. You first need to exploit the kernel or the Docker daemon, and then the hypervisor, to reach the host. It's not impossible, but it's unlikely enough that the whole industry relies on it.

New Plan

So we need to add an extra VM layer. The simplest way to do this is to give each user their own VM running a dedicated Docker endpoint. The proxy then routes each request to the correct Docker endpoint. It also creates, starts, and stops VMs on demand, completely transparently for the user.

The flow for each request looks like this:

- Acquire vm_mutex (wait while another request holds it)

- If the VM doesn't exist, create it

- If the VM is stopped, start it

- Wait for the VM to boot with Docker

- Release vm_mutex

- Forward the request to the VM's Docker endpoint

So if two concurrent requests arrive while the VM isn't booted yet, the first one checks and starts the VM. The second one waits for the first to finish before checking, and by that point the VM is already running.

VM Management

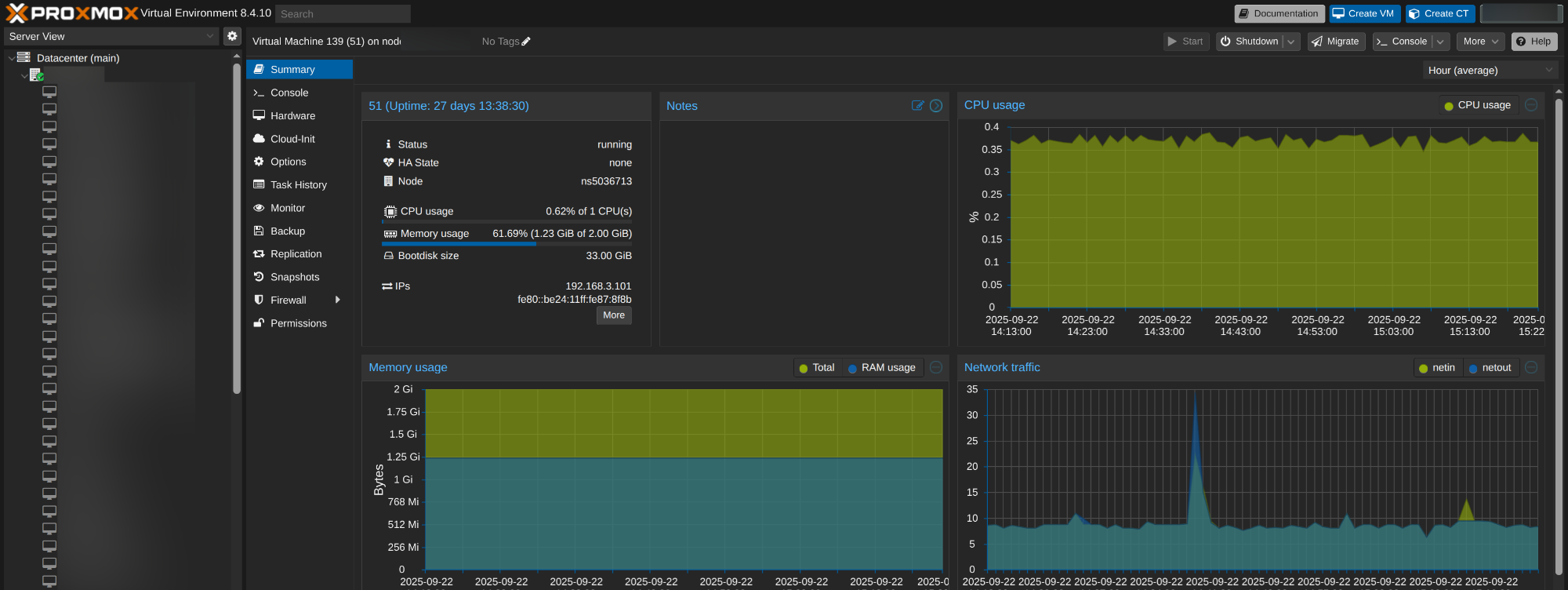

Having a VM per user also means more resource usage, and more VMs to manage. Because of this, scaling to even a few hundred users leaves us no choice but to build a system that spans multiple hosts. This is a challenge, but fortunately also a well-solved one. Tools like OpenStack, or Proxmox (the one I'm using here), make it straightforward to manage VM lifecycles in production.

Proxmox lets you build clusters of machines and create VMs on demand through a single API. To make sure every VM is reachable across nodes from my proxy entrypoint, I connect the cluster together with a WireGuard network.

With that in place, I added a few Go functions to my codebase for talking to the Proxmox API:

- VMExists / VMNameExists → check whether a VM already exists
- VMClone → clone a new VM from a template
- VMStart / VMStop → start or stop a VM

To keep boot times fast, I created a minimal Debian image with only Docker installed. I also enabled the QEMU guest agent, which lets me query the VM's IP address once it's up. Startup, including creation, takes about 5 seconds.

Here’s how I spin up a Docker endpoint when a user connects:

```go
// waitForPort2375 blocks until the Docker API port on ip accepts TCP
// connections, then returns the endpoint URL. Note: it retries forever.
func waitForPort2375(ip net.IP) string {
	addr := net.JoinHostPort(ip.String(), "2375") // also handles IPv6 addresses
	for {
		conn, err := net.DialTimeout("tcp", addr, time.Second)
		if err == nil {
			conn.Close()
			log.Println("Port 2375 is reachable!")
			break
		}
		log.Println("Waiting for port 2375 to be reachable...")
		time.Sleep(time.Second)
	}
	return "http://" + addr
}

// createDockerEndpoint makes sure the user's VM exists and is running, and
// returns the URL of its Docker API. The Proxmox helpers (VMNameExists,
// VMStart, getGuestAgentIPs, CloneFromTemplate) are defined elsewhere.
func createDockerEndpoint(name string) string {
	// Bounds the Proxmox calls below; the port wait above does not use it.
	ctx, cancel := context.WithTimeout(context.Background(), 12*time.Minute)
	defer cancel()

	api := &apiClient{
		Base: strings.TrimRight(apiURL, "/"),
		Auth: authHeader,
		HTTP: &http.Client{Transport: insecureTR(), Timeout: 60 * time.Second},
	}

	exists, vmid, status, err := VMNameExists(api, name)
	if err != nil {
		panic(err) // net/http recovers handler panics, so only this request fails
	}
	log.Printf("VM %q exists: %v", name, exists)

	var ip net.IP
	if exists {
		if status == "stopped" {
			log.Printf("Starting VM %v", vmid)
			VMStart(ctx, api, vmid)
		}
		ip, err = getGuestAgentIPs(ctx, api, vmid)
	} else {
		ip, err = CloneFromTemplate(ctx, api, name)
	}
	if err != nil {
		panic(err)
	}
	log.Printf("IP: %s", ip)

	return waitForPort2375(ip)
}
```

Here I consider the endpoint available once port 2375 is reachable. From then on, each request made to the proxy is forwarded to the corresponding user's VM:

```go
func performRequest(w http.ResponseWriter, r *http.Request, stream bool) {
	// Find (or create and boot) this user's VM and get its Docker endpoint.
	user := GetUser(r)
	endpoint := createDockerEndpoint(user)
	log.Printf("Endpoint: %s", endpoint)

	fullURL := endpoint + r.URL.Path
	if r.URL.RawQuery != "" {
		fullURL += "?" + r.URL.RawQuery
	}
	log.Printf("[%s] %s %s", user, r.Method, fullURL)

	// Buffer POST/PUT bodies so they can be inspected or rewritten.
	var body io.Reader
	if (r.Method == http.MethodPost || r.Method == http.MethodPut) && !stream {
		data, err := io.ReadAll(r.Body)
		if err != nil {
			http.Error(w, err.Error(), http.StatusInternalServerError)
			return
		}
		body = bytes.NewReader(data)
	}

	req, err := http.NewRequest(r.Method, fullURL, body)
	if err != nil {
		http.Error(w, err.Error(), http.StatusInternalServerError)
		return
	}
	// Preserve Content-Type if present.
	if ct := r.Header.Get("Content-Type"); ct != "" {
		req.Header.Set("Content-Type", ct)
	}

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		http.Error(w, err.Error(), http.StatusBadGateway)
		return
	}
	defer resp.Body.Close()

	FilterAndCopyHeaders(w.Header(), resp.Header)
	w.WriteHeader(resp.StatusCode)

	if !stream {
		data, err := io.ReadAll(resp.Body)
		if err != nil {
			color.Red("Error reading response body: %v", err)
		}
		w.Write(data)
		return
	}

	// Relay the stream chunk by chunk, flushing each one to the client.
	flusher, _ := w.(http.Flusher)
	buf := make([]byte, 32*1024)
	for {
		n, err := resp.Body.Read(buf)
		if n > 0 {
			if _, werr := w.Write(buf[:n]); werr != nil {
				color.Red("Error streaming response: %v", werr)
				return
			}
			if flusher != nil {
				flusher.Flush()
			}
		}
		if err != nil {
			if err != io.EOF {
				color.Red("Error streaming response: %v", err)
			}
			return
		}
	}
}
```

For each request, the check sequence runs and returns the Docker endpoint to use for the current user.

This gives us a double-layer isolation mechanism while keeping the illusion intact for the Docker client. From the client's perspective, this is just a standard Docker endpoint.

You can't escape Docker

By default, Docker lets any API user enable dangerous features. These can be used to escape the container and reach the host's filesystem, which here is the VM's.

To make sure our system really has two layers of security, we need to prevent this. Luckily, most of the parameters that enable or disable those features live on the container creation endpoint (POST /containers/create).

To mitigate this, I’m rewriting the request body before it reaches the corresponding user Docker Daemon, ensuring users can’t sneak in configuration that would let them escape the container environment:

```go
// Rebuild the create-container body: selected top-level fields are copied
// from the client's request (data), while HostConfig is rebuilt from safe
// defaults plus resource limits taken from config.
postData := map[string]interface{}{
	"Hostname":     data["Hostname"],
	"Domainname":   data["Domainname"],
	"User":         data["User"],
	"AttachStdin":  data["AttachStdin"],
	"AttachStdout": data["AttachStdout"],
	"AttachStderr": data["AttachStderr"],
	"Tty":          data["Tty"],
	"OpenStdin":    data["OpenStdin"],
	"StdinOnce":    data["StdinOnce"],
	"Env":          data["Env"],
	"Cmd":          data["Cmd"],
	"Image":        imageName,
	"Volumes":      data["Volumes"],
	"WorkingDir":   data["WorkingDir"],
	"Entrypoint":   data["Entrypoint"],
	"OnBuild":      data["OnBuild"],
	"Labels":       data["Labels"],
	"HostConfig": map[string]interface{}{
		"Mounts":          make([]string, 0),
		"Binds":           make([]string, 0),
		"ContainerIDFile": "",
		"LogConfig": map[string]interface{}{
			"Type":   "",
			"Config": nil,
		},
		"NetworkMode":  nil,                      // Any value of "host" (or binding to another container’s namespace) breaks isolation.
		"PortBindings": map[string]interface{}{}, // Port binding is dangerous
		"RestartPolicy": map[string]interface{}{
			"Name":              "no",
			"MaximumRetryCount": 0,
		},
		"AutoRemove":   false,
		"VolumeDriver": "",
		"VolumesFrom":  nil,
		"ConsoleSize":  []int{60, 243},
		/*
			"CapAdd": []string{ // can be used to escape the container
				"CAP_CHOWN",            // Needed for chown, install scripts
				"CAP_DAC_OVERRIDE",     // Read files with stricter permissions
				"CAP_FOWNER",           // Required for some file ownership changes
				"CAP_SETGID",           // Change group ID inside container
				"CAP_SETUID",           // Change user ID inside container
				"CAP_NET_BIND_SERVICE", // Bind to ports <1024 (may still be restricted)
			},
			"CapDrop": []string{
				"CAP_SYS_ADMIN",
				"CAP_NET_ADMIN",
				"CAP_SYS_MODULE",
				"CAP_SYS_PTRACE",
			},
		*/
		"CapAdd":            nil,
		"CapDrop":           nil,
		"CgroupnsMode":      "",
		"Dns":               make([]string, 0),
		"DnsOptions":        make([]string, 0),
		"DnsSearch":         make([]string, 0),
		"ExtraHosts":        make([]string, 0),
		"GroupAdd":          nil,
		"IpcMode":           "", // Any value of "host" (or binding to another container’s namespace) breaks isolation.
		"Cgroup":            "",
		"Links":             nil,
		"OomScoreAdj":       0,
		"PidMode":           "", // Any value of "host" (or binding to another container’s namespace) breaks isolation.
		"Privileged":        false,
		"PublishAllPorts":   false,
		"ReadonlyRootfs":    false,
		"SecurityOpt":       nil, // Could disable seccomp or AppArmor confinement
		"UTSMode":           "",  // Any value of "host" (or binding to another container’s namespace) breaks isolation.
		"UsernsMode":        "",  // If you allow untrusted users to pick a user ns mapping, they could remap root back to 0.
		"ShmSize":           0,
		"Isolation":         "",
		"CgroupParent":      "",
		"Devices":           nil,
		"DeviceCgroupRules": nil,
		"DeviceRequests":    nil,
		"OomKillDisable":    false,
		"Ulimits":           nil,
		"IOMaximumIOps":     0,
		"IOMaximumBandwidth": 0,
		/*
			"MaskedPaths": []string{
				"/proc/kcore",
				"/proc/latency_stats",
				"/proc/timer_list",
				"/proc/sched_debug",
				"/sys/firmware",
				"/proc/scsi",
			},
			"ReadonlyPaths": []string{
				"/proc/asound",
				"/proc/bus",
				"/proc/fs",
				"/proc/irq",
				"/proc/sys",
				"/proc/sysrq-trigger",
				"/sys",
			},
		*/
		"MaskedPaths":          nil,
		"ReadonlyPaths":        nil,
		"CpuShares":            0,
		"NanoCpus":             config["NanoCpus"],
		"CpuPeriod":            0,
		"CpuQuota":             0,
		"CpuRealtimePeriod":    0,
		"CpuRealtimeRuntime":   0,
		"CpusetCpus":           "",
		"CpusetMems":           "",
		"CpuCount":             0,
		"CpuPercent":           0,
		"Memory":               config["Memory"],
		"MemorySwap":           config["Memory"], // Disable Swap
		"MemorySwappiness":     0,                // Disable Swap
		"MemoryReservation":    0,
		"PidsLimit":            config["PidsLimit"],
		"BlkioWeight":          0,
		"BlkioWeightDevice":    nil,
		"BlkioDeviceReadBps":   nil,
		"BlkioDeviceWriteBps":  nil,
		"BlkioDeviceReadIOps":  nil,
		"BlkioDeviceWriteIOps": nil,
		"Runtime":              "runc",
	},
	"NetworkingConfig": map[string]interface{}{
		"EndpointsConfig": data["NetworkingConfig_EndpointsConfig"],
	},
}
```

As you can see, I copy a selected set of top-level fields from the user's request and rebuild everything under HostConfig myself.

Most of those HostConfig keys let a user grant their container special permissions relative to the host.

Options like Privileged: true, or CapAdd containing CAP_SYS_ADMIN, are particularly dangerous in our case. Both effectively give the container root-level access to the kernel.

Mounts and volumes can also be abused to read host files such as /etc/passwd.

Going past pure Docker

If you look at the request mentioned earlier, you'll see a key named Labels.

This is a dictionary filled with the values from the --label argument of docker run, or from the labels section in docker-compose.yml:

```yaml
services:
  web:
    image: nginx:alpine
    labels:
      com.example.description: "A simple nginx container"
      com.example.version: "1.0.0"
      com.example.environment: "production"
    ports:
      - "80:80"
```

These values are passed into the request body without any modification or validation. That's a fantastic opportunity for us to build features on top of them.

From there, users can specify container-specific settings. The first one I implemented is an automatic HTTPS proxy. It routes HTTPS requests made to the main wip.cx node to a container chosen by the user, which makes it possible to expose any container port to the internet over HTTPS.

We can do this by reacting to a specific label, such as:

```yaml
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"
    labels:
      com.wip.http: "www.deadf00d.com:8080"
```

Here, ports publishes nginx (which listens on port 80 inside the container) on port 8080 of the VM. The com.wip.http label then specifies which domain to route from and which VM port to route to.

For the reverse proxy, I'm using Traefik, since it already has a Redis provider that lets me push configuration from my Go code very easily:

```go
// value holds the raw com.wip.http label, e.g. "front:80" or "www.deadf00d.com:8080".
// Parse it (comma-separated "host:port" entries), skipping malformed ones.
parsedValues := []map[string]string{}
if value != "" {
	for _, entry := range strings.Split(value, ",") {
		parts := strings.Split(entry, ":")
		if len(parts) != 2 {
			log.Printf("Skipping invalid entry: %s", entry)
			continue
		}
		host := strings.TrimSpace(parts[0])
		port := strings.TrimSpace(parts[1])
		if _, err := strconv.Atoi(port); err != nil {
			log.Printf("Skipping entry with invalid port: %s", entry)
			continue
		}
		parsedValues = append(parsedValues, map[string]string{"host": host, "port": port})
	}
}

// The backend is the user's VM, i.e. the host of its Docker endpoint.
parsedEndpoint, err := url.Parse(endpoint)
if err != nil {
	color.Red("Error parsing endpoint URL: %v", err)
	http.Error(w, "Error parsing endpoint URL", http.StatusInternalServerError)
	return
}

// setKey writes one Traefik setting to Redis. It returns false after
// sending an error response, so the caller should stop.
setKey := func(k, v string) bool {
	if err := rdb.Set(ctx, k, v, 0).Err(); err != nil {
		color.Red("Error setting Redis key %s: %v", k, err)
		http.Error(w, "Error setting Redis key", http.StatusInternalServerError)
		return false
	}
	return true
}

for i, entry := range parsedValues {
	// One router and service per entry; the index keeps several entries in
	// the same label from overwriting each other's keys.
	key := user + "-" + strings.ReplaceAll(var_name, "/", "_") + "-" + strconv.Itoa(i)

	// Remove any previous router config under this name.
	oldKeys, err := rdb.Keys(ctx, "traefik/http/routers/"+key+"/*").Result()
	if err != nil {
		color.Red("Error finding keys to delete: %v", err)
		http.Error(w, "Error finding keys to delete", http.StatusInternalServerError)
		return
	}
	for _, k := range oldKeys {
		if err := rdb.Del(ctx, k).Err(); err != nil {
			color.Red("Error deleting Redis key: %v", err)
			http.Error(w, "Error deleting Redis key", http.StatusInternalServerError)
			return
		}
	}

	// A bare keyword such as "front" becomes front.<user>.wip.cx.
	host := entry["host"]
	if !strings.Contains(host, ".") {
		host += "." + user + ".wip.cx"
	}

	router := "traefik/http/routers/" + key
	if !setKey(router+"/rule", "Host(`"+host+"`)") ||
		!setKey(router+"/entrypoints", "websecure") ||
		!setKey(router+"/tls/certresolver", "le") ||
		!setKey(router+"/service", "svc-"+key) ||
		!setKey("traefik/http/services/svc-"+key+"/loadbalancer/servers/0/url",
			"http://"+parsedEndpoint.Hostname()+":"+entry["port"]) {
		return
	}
}
```

This way, once you specify a com.wip.http label, a Traefik config is pushed to Redis. All you have to do is add a CNAME record pointing your domain to wip.cx.

And boom! You're online on your domain name!

We can apply the same approach to future features, and the possibilities are endless: regional deployment, load balancing, TLS-level proxying, and more.

Full test and demo

As a demo, I'm deploying GitLab using its Docker Compose file:

```yaml
services:
  gitlab:
    image: gitlab/gitlab-ce:latest
    hostname: "front.gitlab.wip.cx"
    ports:
      - "80:80" # change to "8080:80" if 80 is busy
    labels:
      com.wip.http: front:80
    volumes:
      - ./config:/etc/gitlab
      - ./logs:/var/log/gitlab
      - ./data:/var/opt/gitlab
    environment:
      GITLAB_OMNIBUS_CONFIG: |
        external_url 'https://front.gitlab.wip.cx'
        nginx['listen_https'] = false
        nginx['redirect_http_to_https'] = false
        nginx['listen_port'] = 80
        nginx['listen_addresses'] = ['0.0.0.0']
```

Here, I'm using a feature of the com.wip.http label: if you specify just a keyword, the container is bound to <keyword>.<project>.wip.cx. This lets users put things online without having to configure a domain name right away.

Last words

To wrap up: this product took me about a month to build. I've been using it daily ever since, and I really think it's the best and easiest way I've ever put things into production.

If you think this is solving a problem for you, please register for the beta, or contact me on my website.

Maurice-Michel Didelot

https://www.deadf00d.com/
